The Ethical Path to AI: Navigating Strategies for Innovation and Integrity

Why take a course about AI ethics?

The "Ethical Path to AI" certificate is a six-week, online, asynchronous, hands-on training program that empowers you to:

- Lead with Confidence: Build Trust Through Ethical AI.

- Mitigate Risk, Maximize Opportunity: Ethical AI for Business Success.

- Satisfy Stakeholders: Deliver Ethical AI Solutions.

- Develop Ethical Advantage: Outperform Competitors with Responsible AI.

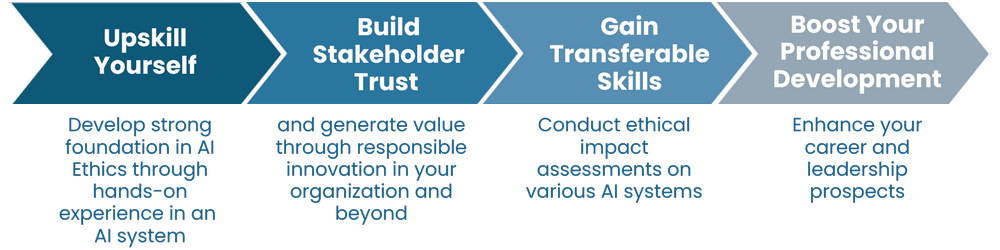

- Upskill Yourself - develop a strong foundation in AI ethics through hands-on experience with an AI system.

- Build Stakeholder Trust and generate value through responsible innovation in your organization and beyond.

- Gain Transferable Skills - conduct ethical impact assessments on various AI systems.

- Boost Your Professional Development - enhance your career and leadership prospects.

What to Expect?

Course Overview:

- Duration: 6 weeks plus orientation.

- Structure: Combines video lectures from renowned experts, assigned readings, and practical projects.

- Focus: Hands-on learning and practical application of AI ethics with weekly assignments and personalized feedback from the instructor.

- Final touch: Conclude the program with an actionable ethical risk assessment plan for your AI system.

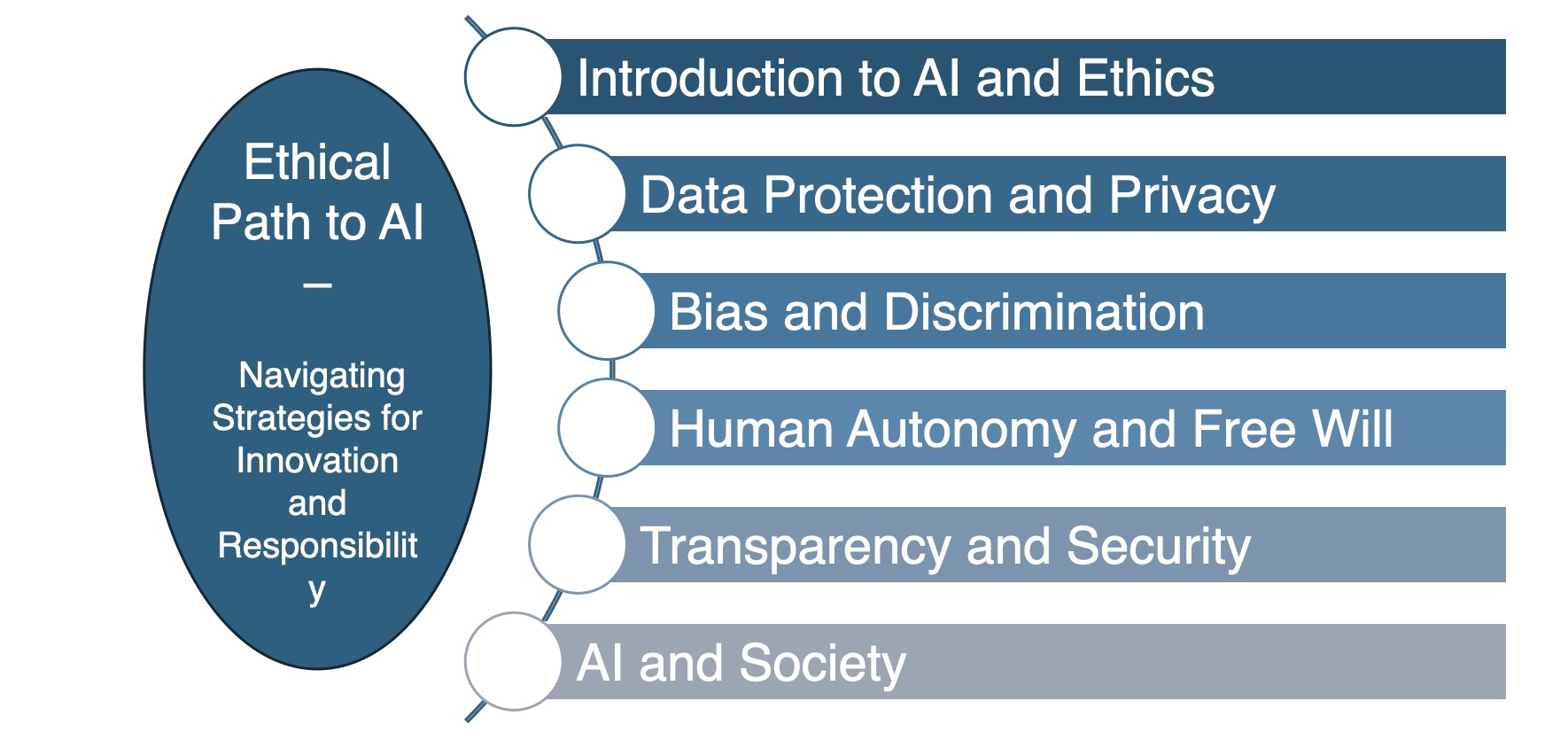

Ethical Path to AI: Navigating Strategies for Innovation and Responsibility

- Introduction to AI and Ethics

- Data Protection and Privacy

- Bias and Discrimination

- Human Autonomy and Free Will

- Transparency and Security

- AI and Society

Course Breakdown:

- Week 1: Introduction to the course, selection of an AI system for in-depth analysis.

- Weeks 2-5: Deep dive into the chosen AI system, applying ethical frameworks and principles.

- Week 6: Culmination in an ethical impact assessment of the selected AI system.

- Cost: $1,450 (USD)

Program Badge

Showcase your expertise with an official digital badge from Emory. Badges are issued upon completion and can be displayed on your online channels, such as LinkedIn.

Featuring world-renowned experts:

- Dr. Paul Root Wolpe

- Dr. Anne-Elisabeth Courrier

- Dr. Ifeoma Ajunwa

- Dr. Jinho Choi

- Dr. Maarten Lamers

- Dr. Naomi Lefkovitz

- Dr. Carissa Veliz, Oxford University

- Dr. Serena Villata, Institut 3IA Côte d'Azur

- Dr. Gloria Washington, Howard University

- Dr. Lance Waller, Emory University

- Dr. Bryn Williams-Jones, University of Montreal

Frequently Asked Questions

No prior knowledge of AI systems or ethics is required; Module 1 introduces both topics.

Each module requires 6 to 7 hours of study, including the assignments.

Every module follows the same internal structure of five units:

- Unit 1: Introduction, with a self-assessment of your proficiency in the topic

- Units 2, 3, and 4: Content, videos, and reading lists

- Unit 5: Assignments and self-assessments

In each module, you must participate in asynchronous discussions to interact with other students and apply your knowledge and skills in a specific context.

Depending on the module, you will also prepare individual short essays, slide presentations, or short-question exercises, all focused on the AI system you selected in Module 1.

Choose an AI system that interacts directly with people (e.g., not an AI system used for weather prediction or agriculture).

Reading lists are organized at two levels: a compulsory reading list and an optional reading list.

The platform includes a calendar with friendly reminders of the deadlines.

You can request up to two deadline extensions by contacting the Program Manager.

This program is non-credit; you will receive a certificate of completion.

For further information, contact acourri@emory.edu.

This certificate is offered by the Emory Center for Ethics with support from Emory Continuing Education and the Emory Center for AI Learning.

The Emory Center for Ethics is one of the largest and most comprehensive ethics centers in the United States and a leader in advancing humanity through ethical AI. The Center helps students, professionals, and the public confront and understand ethical issues in health, technology, the arts, the environment, and the life sciences. We teach, publish, and offer programming that includes public engagement as part of our commitment to respond to issues and concerns that matter not just to the academic community but to the Atlanta community and beyond.